The only thing not covered in these answers that I'd like to mention is that it also depends on how you're using SQL. For some reason, none of the arcpy.da functions have an executemany feature. This is really strange because pretty much every other Python SQL library does.
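For comparison, here is roughly what an executemany call looks like in Python's built-in sqlite3 module. This is only a sketch: the parcels table and its rows are made up for illustration and have nothing to do with arcpy.

```python
import sqlite3

# In-memory database so the sketch leaves no files behind.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE parcels (parcel_id INTEGER, owner TEXT)")

# Hypothetical rows; in practice these would come from your own data.
rows = [(1, "Smith"), (2, "Jones"), (3, "Lee")]

# executemany runs the same parameterized statement once per row.
cur.executemany("INSERT INTO parcels (parcel_id, owner) VALUES (?, ?)", rows)
conn.commit()

inserted = cur.execute("SELECT COUNT(*) FROM parcels").fetchone()[0]
print(inserted)
conn.close()
```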

The where clause in the arcpy.da functions is also limited to around 120 characters. I need to emphasize here that pandas isn't just a little faster in this case. It's so much faster that I was literally laughing at myself for not doing it sooner. Using pandas dropped one script's execution time from well over an hour (I forget if this was the jump from 3.5 hours or from 1.5 hours) to literally 12 minutes. There's also a very interesting project from the Dutch CWI called DuckDB.

It's an open-source embedded analytical database that tries to address the SQL mismatch for the most typical data science workflow. In the first 15 minutes of the talk "DuckDB – The SQLite for Analytics", one of the authors, Mark Raasveldt, explains how DuckDB addresses the problem. The dataframe created from the output of the read_csv function receives additional processing, such as converting the date column to a datetime index named date. This operation is performed with the set_index method of the df dataframe object and its inplace parameter. Setting the inplace parameter to True modifies the df dataframe directly, so the result does not need to be assigned to a new variable. Here is a screen shot with output from the second major portion of the script.

Recall that the second major portion of the script adds a new index to the df dataframe based on the datetime converted date column values from the read_csv version of the df dataframe. Additionally, the second major portion of the script drops the original date column from the df dataframe after using it to create a datetime index. The display of column metadata is unaffected by the changes in the second script block.

You can still see the column names and their data types. Pandas stores string values with an object data type. In addition to the basic SQLContext, you can also create a HiveContext, which provides a superset of the functionality provided by the basic SQLContext. Additional features include the ability to write queries using the more complete HiveQL parser, access to Hive UDFs, and the ability to read data from Hive tables. If these dependencies are not a problem for your application, then using HiveContext is recommended for the 1.3 release of Spark. Future releases will focus on bringing SQLContext up to feature parity with a HiveContext.

In the above image, you will note that we are using the Snowflake connector library for Python to establish a connection to Snowflake, much like SnowSQL works. We send our SQL queries to the required databases, tables, and other objects using the connection object. The results set is in the form of a cursor, which we will convert into a Pandas DataFrame. First, it illustrates how to reconstruct the dataframe from the .csv file created in the previous section. The initial dataframe is based on the application of the read_csv function to the .csv file.

Next, three additional Python statements display the dataframe contents as well as reveal metadata about the dataframe. The contents of the file are saved in a Pandas dataframe object named df. Spark SQL can convert an RDD of Row objects to a DataFrame, inferring the datatypes.

Rows are constructed by passing a list of key/value pairs as kwargs to the Row class. The keys of this list define the column names of the table, and the types are inferred by looking at the first row. Since we currently only look at the first row, it is important that there is no missing data in the first row of the RDD. In future versions we plan to more completely infer the schema by looking at more data, similar to the inference that is performed on JSON files.

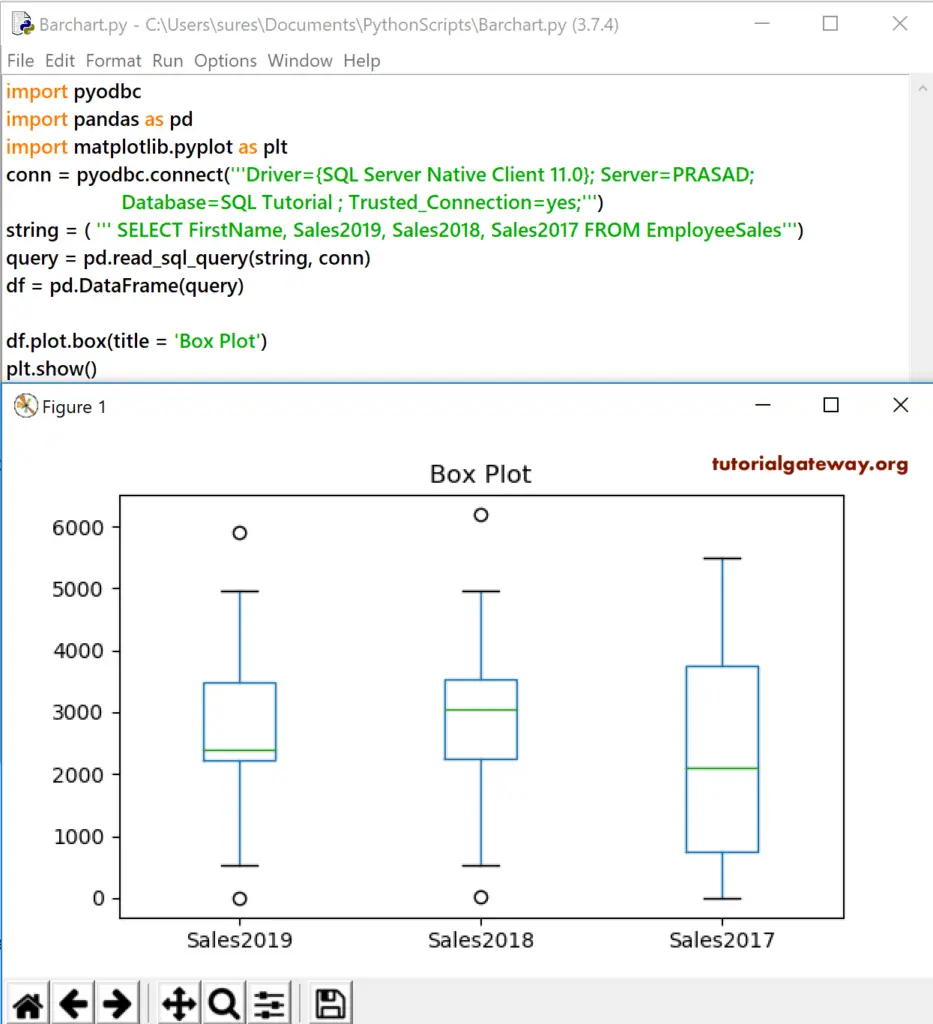

The simplest way to convert a SQL query result to a pandas data frame is to use the pandas.read_sql_query() function. If you want to write the file yourself, you may also retrieve the column names and dtypes and build a dictionary to convert pandas data types to SQL data types. That said, it doesn't mean there aren't niche use cases in data analysis which can be solved in SQL alone. One example that I can give is ad-hoc exploratory data visualisation in tools like sqliteviz (in-browser SQLite with Plotly's full array of scientific visualisation) and sqlitebrowser.
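As a rough sketch of the read_sql_query() approach, the snippet below builds a throwaway in-memory SQLite table and reads it back into a dataframe. The column names and the population figures are placeholders invented for the example.

```python
import sqlite3

import pandas as pd

# Throwaway in-memory database with a small, made-up table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE state_population (state TEXT, population INTEGER)")
conn.executemany(
    "INSERT INTO state_population VALUES (?, ?)",
    [("Texas", 29000000), ("Ohio", 11800000)],  # placeholder values
)
conn.commit()

# read_sql_query runs the query and returns the result set as a DataFrame.
df = pd.read_sql_query(
    "SELECT state, population FROM state_population ORDER BY state", conn
)
print(df)
conn.close()
```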

As you can tell from the preceding screen shot, the date column in the df dataframe does not represent datetime values. Therefore, if you want to perform tasks, such as extract date and/or time values from a datetime value, you cannot use the built-in Python functions and properties for working with datetime values. However, you can easily use these functions and properties after converting the date column values to datetime values and saving the converted values as the index for the dataframe.

This section shows one way to perform the conversion and indicates its impact on the dataframe metadata. Notice that the data types of the partitioning columns are automatically inferred. Currently, numeric data types and string type are supported. Sometimes users may not want to automatically infer the data types of the partitioning columns. For these use cases, the automatic type inference can be configured by spark.sql.sources.partitionColumnTypeInference.enabled, which defaults to true. When type inference is disabled, string type will be used for the partitioning columns.

The sqlite3 module provides a straightforward interface for interacting with SQLite databases. A connection object is created using sqlite3.connect(); the connection must be closed at the end of the session with the .close() command. While the connection is open, any interactions with the database require you to make a cursor object with the .cursor() command. The cursor is then ready to perform all kinds of operations with .execute(). This data structure keeps the status of all data in the database.
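The connect, cursor, execute, and close sequence described above can be sketched in a few lines. The in-memory database and the trivial query are assumptions chosen to keep the example self-contained.

```python
import sqlite3

# Open a connection; ":memory:" keeps the database in RAM only.
conn = sqlite3.connect(":memory:")

# Interactions with the database go through a cursor object.
cur = conn.cursor()
cur.execute("SELECT 1 + 1 AS total")
row = cur.fetchone()
print(row)

# Close the cursor and the connection at the end of the session.
cur.close()
conn.close()
```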

This is not something pandas can do, because it can't access all the data simultaneously. It also can't do some operations even with the chunksize parameter of read_csv. As an example, you can't run direct batch operations on datasets that are too large for your memory to accommodate. Any other tasks which depend on your entire dataset need extra coding.
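To give a feel for the extra coding involved, here is a minimal sketch of computing a mean over a CSV file in chunks. The inline CSV text stands in for a file too large for memory, and the symbol and close columns are assumptions for the example.

```python
import io

import pandas as pd

# Small inline CSV standing in for a file that would not fit in memory.
csv_text = "symbol,close\nAAA,10.0\nBBB,20.0\nAAA,30.0\nBBB,40.0\nAAA,50.0\n"

# Running totals have to be carried across chunks by hand.
total = 0.0
count = 0
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2):
    total += chunk["close"].sum()
    count += len(chunk)

mean_close = total / count
print(mean_close)
```

A simple mean is easy to accumulate this way; operations such as sorts or joins across the whole dataset take considerably more bookkeeping.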

All of these can be handled in SQL without extra coding, just with a simple query. Simple SQL operations can be used without any fear about memory. Python, on the other hand (pandas is fairly "pythonic", so it holds true here), is flexible and accessible to people from various backgrounds. It can be used as a "scripting language", as a functional language, and as a fully featured OOP language. Two additional columns are derived from the datetimeindex for the new sample dataframe. The datetimeindex is derived originally from the date column in the .csv file exported from SQL Server. One additional column has the name Year.

The values 0 through 9 are RangeIndex values for the first set of ten rows. These RangeIndex values are not in the .csv file, but they are added by the read_csv function to track the rows in a dataframe. Examples include data visualization, modeling, clustering, machine learning, and statistical analyses. Now let's load some additional data into Pandas from a SQLite database. We'll use the sqlite3 library to load and read from the database. You can use a similar process with regular databases as well as with different Python libraries, but SQLite is serverless and requires only the single database file we copied in earlier.

JSON data source will not automatically load new files that are created by other applications (i.e. files that are not inserted to the dataset through Spark SQL). For a DataFrame representing a JSON dataset, users need to recreate the DataFrame and the new DataFrame will include new files. I thought I would add that I do a lot of time-series based data analysis, and the pandas resample and reindex methods are invaluable for doing this.
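For readers who haven't used them, here is a small sketch of resample and reindex on a made-up daily series. The close column and the dates are hypothetical.

```python
import pandas as pd

# Six days of made-up daily values on a DatetimeIndex.
idx = pd.date_range("2021-01-01", periods=6, freq="D")
daily = pd.DataFrame({"close": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]}, index=idx)

# resample groups rows into fixed time bins; here, the mean of each 2-day bin.
two_day = daily.resample("2D").mean()

# reindex aligns the data to a new index; dates with no data become NaN.
longer_idx = pd.date_range("2021-01-01", periods=8, freq="D")
extended = daily.reindex(longer_idx)

print(two_day)
print(extended)
```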

Yes, you can do similar things in SQL (I tend to create a DateDimension table for helping with date-related queries), but I just find the pandas methods much easier to use. Then, additional code focuses on configuring a dataframe to filter. The read_csv function reads the .csv file created from SQL Server in the first section of this tip. It can often be useful to extract a subset of columns or rows from a dataframe.

This can make it easier to focus on what matters most in a data science project or to exclude columns with an unacceptably high proportion of missing values. Some data science models, such as random forests, call for using multiple sets of columns and rows and then averaging the results across different sets of columns from a source dataframe. After performing this operation, the date column in the df dataframe from the imported .csv file, which is merely a series of string values, is dropped because it is no longer needed. This section begins by applying the to_datetime Pandas method to the string values in the date column that is populated from reading the .csv file. The converted values are assigned to a column named datetime_index.
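The conversion steps described above can be sketched as follows. This is not the original script: the inline CSV text and the symbol and close columns are stand-ins, and only the date and datetime_index names come from the description.

```python
import io

import pandas as pd

# Inline CSV standing in for the exported .csv file (made-up values).
csv_text = "date,symbol,close\n2021-01-04,AAA,10.0\n2021-01-05,AAA,11.0\n"
df = pd.read_csv(io.StringIO(csv_text))

# The date column arrives as strings, so convert it to datetime values.
df["datetime_index"] = pd.to_datetime(df["date"])

# Use the converted values as the index, then drop the original strings.
df = df.set_index("datetime_index")
df = df.drop(columns=["date"])

print(df.index.dtype)
print(df)
```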

Connect to the MSSQL server by using the server name and database name with pdb.connect(). Then read the SQL query into a pandas data frame using read_sql() and print the data. We may need database results from the table using different queries to work on the data and apply machine learning to analyze it and make better suggestions. We can convert our data into a Python Pandas dataframe to apply different machine learning algorithms to the data. Let us see how we can convert the SQL query results to a Pandas dataframe using MS SQL as the server.

Let's use a simple SQL query and get the results set that we desire within a pandas dataframe. As of version 0.29.0, you can use the to_dataframe() function to retrieve query results or table rows as a pandas.DataFrame. Learning how to create a Spark DataFrame is one of the first practical steps in the Spark environment. Spark DataFrames help provide a view into the data structure and other data manipulation functions. Different methods exist depending on the data source and the data storage format of the files. In the following walkthrough, you use data stored in the NOAA public S3 bucket.

For more information, see NOAA Global Historical Climatology Network Daily. The objective is to convert 10 CSV files to a partitioned Parquet dataset, store its related metadata into the AWS Glue Data Catalog, and query the data using Athena to create a data analysis. Below the metadata is a df dataframe excerpt displaying the top and bottom five rows. The rows remain sorted in the same order as they were in SQL Server.

This order is described in the "Exporting a .csv file for a SQL Server results set" subsection. The following screen shot shows the Python script for creating a subset of the rows for the sample dataframe used with this tip. The header for the IDLE window in which the Python script displays also indicates the file resides in the python_programs directory of the C drive. In other words, the excerpted values appearing in the output from the first commented script block do not affect the actual number of rows transferred from the .csv file to the dataframe. The full set of dataframe column names and their data types appear below the print function output for the dataframe. The first column name is date, and the last column name is ema_200. The following screen shot is a listing from the read_csv.py file that shows how to read the .csv file created in the preceding subsection into a Pandas dataframe.

The screen shot also illustrates two different ways to display the contents of the dataframe. The following screen shot shows a SQL Server Management Studio session containing a simple query and an excerpt from its results set. The full results set contains close prices and exponential moving averages for the close prices based on seven different period lengths for six ticker symbols.

By right-clicking the rectangle to the top left of the results set and just below the Results tab, you can save the contents of the results set to a .csv file in a destination of your choice. For this demonstration, the results set was saved in a file named ema_values_for_period_length_by_symbol_and_date.csv within the DataScienceSamples directory of the C drive. SQL and Pandas are the two different tools that have a great role to play when handling the data. These are not only the basic tools for any data-related tasks, but also they are very easy to use and implement even by a novice user. In this article, we will go through a list of operations that can be performed on data and will compare how the same task can be done using SQL and using Pandas.

By the end, we will be in a position to judge how easy Pandas is to use. The major points to be covered in this article are listed below. This SQL query will fetch the column and all the rows from the state_population table. One thing to notice here is that when we select only one column, the result is a pandas Series object rather than a pandas DataFrame object.

We convert it back to a DataFrame by using the DataFrame function. In this section on reading SQL into a pandas DataFrame, you have learned how to run a SQL query and convert the result into a DataFrame. You have also learned how to read an entire database table, only selected rows, and so on.
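The Series-to-DataFrame round trip mentioned above looks roughly like this. The state_population dataframe here is built by hand with placeholder values rather than loaded from a database.

```python
import pandas as pd

# Hand-built stand-in for the state_population table (placeholder values).
state_population = pd.DataFrame(
    {"state": ["Ohio", "Texas"], "population": [11800000, 29000000]}
)

# Selecting a single column with a string key returns a Series.
selected = state_population["population"]
print(type(selected))

# Wrapping the Series in pd.DataFrame turns it back into a one-column DataFrame.
as_frame = pd.DataFrame(selected)
print(type(as_frame))
```

Selecting with a list of column names, as in state_population[["population"]], also returns a DataFrame directly.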

The below example can be used to create a database and table in Python by using the sqlite3 library. The sqlite3 library is part of the Python standard library, so it does not need to be installed separately with pip. Before we go into learning how to use pandas read_sql() and other functions, let's create a database and table by using sqlite3.
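Here is one way this might look. It is a sketch under assumptions: the example.db file name, the courses table, and its rows are all invented for illustration, and the database is created in a temporary directory so nothing is left behind.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as tmp_dir:
    # Connecting to a path that does not exist yet creates the database file.
    db_path = os.path.join(tmp_dir, "example.db")  # hypothetical file name
    conn = sqlite3.connect(db_path)

    # Hypothetical table and rows, for illustration only.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS courses ("
        "course_id INTEGER PRIMARY KEY, course_name TEXT, fee REAL)"
    )
    conn.executemany(
        "INSERT INTO courses (course_name, fee) VALUES (?, ?)",
        [("Spark", 25000.0), ("Pandas", 20000.0)],
    )
    conn.commit()

    # Confirm the table exists and holds the inserted rows.
    table_names = [
        r[0]
        for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    ]
    row_count = conn.execute("SELECT COUNT(*) FROM courses").fetchone()[0]
    conn.close()

print(table_names, row_count)
```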

The pandas read_sql() function is used to read a SQL query or database table into a DataFrame. It is a wrapper around the read_sql_query() and read_sql_table() functions. Based on the input, it calls one of these functions internally and returns the SQL table as a two-dimensional data structure with labeled axes. All data types of Spark SQL are located in the package org.apache.spark.sql.types.

To access or create a data type, please use the factory methods provided in org.apache.spark.sql.types.DataTypes. You can also manually specify the data source that will be used along with any extra options that you would like to pass to the data source. Data sources are specified by their fully qualified name (i.e., org.apache.spark.sql.parquet), but for built-in sources you can also use their short names. DataFrames of any type can be converted into other types using this syntax. We can also use pandas to create new tables within an SQLite database.

Here, we redo an exercise we did before with CSV files, this time using our SQLite database. We first read in our survey data, then select only those survey results for 2002, and then save them out to their own table so we can work with them on their own later. In this article, I'll show you how to convert a SQL query result to a pandas DataFrame using a Python script.
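The survey exercise described above can be sketched like this. The in-memory database, the surveys and surveys2002 table names, and the three sample rows are assumptions made so the example runs on its own.

```python
import sqlite3

import pandas as pd

# In-memory database seeded with a tiny, made-up surveys table.
conn = sqlite3.connect(":memory:")
seed = pd.DataFrame({"record_id": [1, 2, 3], "year": [2001, 2002, 2002]})
seed.to_sql("surveys", conn, index=False)

# Read the survey data back, then keep only the 2002 results.
surveys_df = pd.read_sql_query("SELECT * FROM surveys", conn)
surveys2002 = surveys_df[surveys_df["year"] == 2002]

# Save the subset to its own table for later use.
surveys2002.to_sql("surveys2002", conn, index=False, if_exists="replace")

check = pd.read_sql_query("SELECT COUNT(*) AS n FROM surveys2002", conn)
n_rows = int(check.loc[0, "n"])
print(n_rows)
conn.close()
```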
